The decision tree is one of the best-known and oldest classifiers for categorizing data in machine learning. In industry today, three classic algorithms are used to grow decision trees: ID3, C4.5 and CART. To increase accuracy and avoid overfitting, we normally also apply pre-pruning and post-pruning. Tuning the parameters used to generate the tree is another intriguing part of hands-on work.
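The three algorithms differ mainly in how they score a candidate split: ID3 uses information gain (based on entropy), C4.5 divides that gain by the entropy of the split itself (the gain ratio), and CART typically uses Gini impurity for classification. The minimal sketch below computes all three scores for one split of a toy label list. The function names and the example data are hypothetical, and it only illustrates the criteria, not full tree construction.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def information_gain(parent, children):
    """ID3-style score: parent entropy minus the weighted entropy of the children."""
    n = len(parent)
    return entropy(parent) - sum(len(ch) / n * entropy(ch) for ch in children)

def gain_ratio(parent, children):
    """C4.5-style score: information gain divided by the split information."""
    n = len(parent)
    split_info = -sum(len(ch) / n * log2(len(ch) / n) for ch in children if ch)
    return information_gain(parent, children) / split_info if split_info > 0 else 0.0

def gini_decrease(parent, children):
    """CART-style score: reduction in weighted Gini impurity."""
    n = len(parent)
    return gini(parent) - sum(len(ch) / n * gini(ch) for ch in children)

# Hypothetical toy split: 8 samples divided into two branches by some attribute.
parent = ["yes"] * 4 + ["no"] * 4
children = [["yes", "yes", "yes", "no"], ["yes", "no", "no", "no"]]
print(round(information_gain(parent, children), 3))  # 0.189
print(round(gain_ratio(parent, children), 3))        # 0.189 (split information is 1 bit here)
print(round(gini_decrease(parent, children), 3))     # 0.125
```

Because this split divides the samples into two equal halves, its split information is exactly 1 bit, so the gain ratio equals the information gain; the two differ once branches have unequal sizes or there are many branches.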

However, do you really know when to use a decision tree? There are so many classifiers available today. Read articles nowadays and it seems a neural network can do anything for you, while deep learning can classify and predict precisely as well. But wait: what if your sample size is not very big? Can you still train a good neural network? Not really, I would say. There are still good reasons for this classic classifier to exist today:

Advantages

Simple to understand and to interpret. Trees can be visualized.

Requires little data preparation. Other techniques often require data normalization, the creation of dummy variables and the removal of blank values. Note, however, that some implementations (older releases of scikit-learn, for example) do not support missing values.
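Normalization is unnecessary because a split only asks whether a feature value falls below a threshold, so any strictly increasing rescaling of a feature leaves the possible partitions of the data unchanged. The sketch below uses a hypothetical helper, `best_threshold_split`, that searches one numeric feature with the Gini criterion; it finds the best split before and after multiplying the feature by 1000 and shows that the same samples end up on the left.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 for an empty list)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_threshold_split(xs, ys):
    """Return (threshold, left_indices) minimizing weighted Gini for the test x <= threshold."""
    values = sorted(set(xs))
    best = None
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [i for i, x in enumerate(xs) if x <= t]
        right = [i for i, x in enumerate(xs) if x > t]
        score = (len(left) * gini([ys[i] for i in left])
                 + len(right) * gini([ys[i] for i in right])) / len(xs)
        if best is None or score < best[0]:
            best = (score, t, left)
    if best is None:  # fewer than two distinct values: no split is possible
        return None, list(range(len(xs)))
    return best[1], best[2]

xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = ["a", "a", "a", "b", "b", "b"]
t_raw, left_raw = best_threshold_split(xs, ys)
t_scaled, left_scaled = best_threshold_split([x * 1000 for x in xs], ys)
print(t_raw, left_raw)        # 6.5 [0, 1, 2]
print(t_scaled, left_scaled)  # 6500.0 [0, 1, 2]
```

Only the threshold value moves with the units; the partition, and therefore the tree's predictions, stays the same.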

The cost of using the tree (i.e., predicting data) is logarithmic in the number of data points used to train the tree, provided the tree is reasonably balanced.
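Prediction follows a single root-to-leaf path, so its cost is the depth of that path. For a balanced binary tree with one training point per leaf, that depth is about log2(n). The sketch below builds such an idealized tree over sorted 1-D points and counts the comparisons made during one prediction. This setup is an assumption made for illustration: learned trees are often unbalanced, and then the logarithmic bound does not hold.

```python
import math

def build_balanced(points):
    """Build an idealized balanced tree over sorted 1-D points.
    Internal nodes are (threshold, left, right); each leaf is a single point."""
    if len(points) == 1:
        return points[0]
    mid = len(points) // 2
    threshold = (points[mid - 1] + points[mid]) / 2
    return (threshold, build_balanced(points[:mid]), build_balanced(points[mid:]))

def predict(tree, x):
    """Walk from the root to a leaf; return (leaf, number of comparisons)."""
    comparisons = 0
    while isinstance(tree, tuple):
        threshold, left, right = tree
        comparisons += 1
        tree = left if x <= threshold else right
    return tree, comparisons

for n in (16, 1024, 65536):
    tree = build_balanced([float(i) for i in range(n)])
    leaf, steps = predict(tree, n / 3)
    print(n, steps, math.ceil(math.log2(n)))  # comparisons equal log2(n) here
```

Growing the training set from 16 to 65,536 points only raises the number of comparisons per prediction from 4 to 16.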

Able to handle both numerical and categorical data. Other techniques are usually specialized in analyzing datasets that have only one type of variable.

Able to handle multi-output problems.

Uses a white box model. If a given situation is observable in a model, the explanation for the condition is easily expressed in Boolean logic. By contrast, in a black box model (e.g., an artificial neural network), results may be more difficult to interpret.
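Because each leaf is reached through a conjunction of tests along its path, a tree can be read off as a set of if-then rules. The sketch below stores a small hand-written tree as nested dictionaries and prints one Boolean rule per leaf. The dictionary layout, the feature names `humidity` and `wind_speed`, and the labels are all hypothetical, chosen only for illustration.

```python
def extract_rules(node, conditions=()):
    """Yield (conditions, label) for every root-to-leaf path of a dict-based tree."""
    if "label" in node:
        yield conditions, node["label"]
        return
    feature, threshold = node["feature"], node["threshold"]
    yield from extract_rules(node["left"], conditions + (f"{feature} <= {threshold}",))
    yield from extract_rules(node["right"], conditions + (f"{feature} > {threshold}",))

# Hypothetical hand-written tree: internal nodes test feature <= threshold.
tree = {
    "feature": "humidity", "threshold": 70,
    "left": {"label": "play"},
    "right": {
        "feature": "wind_speed", "threshold": 20,
        "left": {"label": "play"},
        "right": {"label": "stay home"},
    },
}

for conds, label in extract_rules(tree):
    print("IF " + " AND ".join(conds) + f" THEN {label}")
# IF humidity <= 70 THEN play
# IF humidity > 70 AND wind_speed <= 20 THEN play
# IF humidity > 70 AND wind_speed > 20 THEN stay home
```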

Possible to validate a model using statistical tests. That makes it possible to account for the reliability of the model.

Performs well even if its assumptions are somewhat violated by the true model from which the data were generated.

Disadvantages

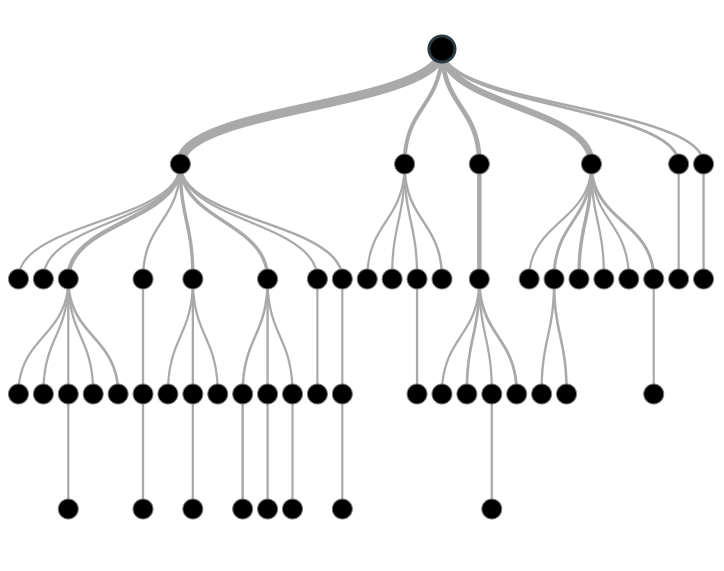

Decision-tree learners can create over-complex trees that do not generalize well beyond the training data. This is called overfitting. Mechanisms such as pruning, setting the minimum number of samples required at a leaf node, or setting the maximum depth of the tree are necessary to avoid this problem.
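Pre-pruning stops a branch from growing before it memorizes noise. Below is a minimal greedy CART-style builder for numeric features using Gini impurity, with two pre-pruning controls. The parameter names `max_depth` and `min_samples_leaf` are borrowed from scikit-learn's `DecisionTreeClassifier`, but this is an independent toy implementation, not that library's code, and post-pruning is not shown.

```python
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def build_tree(X, y, depth=0, max_depth=3, min_samples_leaf=1):
    """Greedy CART-style tree on numeric features with two pre-pruning rules."""
    # Pre-pruning: stop at the maximum depth or when the node is already pure.
    if depth >= max_depth or len(set(y)) == 1:
        return {"label": majority(y)}
    best = None
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [i for i, row in enumerate(X) if row[f] <= t]
            right = [i for i, row in enumerate(X) if row[f] > t]
            # Pre-pruning: reject splits that would leave too few samples in a leaf.
            if len(left) < min_samples_leaf or len(right) < min_samples_leaf:
                continue
            score = (len(left) * gini([y[i] for i in left])
                     + len(right) * gini([y[i] for i in right])) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    if best is None:  # no admissible split: make this node a leaf
        return {"label": majority(y)}
    _, f, t, left, right = best
    return {
        "feature": f, "threshold": t,
        "left": build_tree([X[i] for i in left], [y[i] for i in left],
                           depth + 1, max_depth, min_samples_leaf),
        "right": build_tree([X[i] for i in right], [y[i] for i in right],
                            depth + 1, max_depth, min_samples_leaf),
    }

def predict(node, row):
    while "label" not in node:
        node = node["left"] if row[node["feature"]] <= node["threshold"] else node["right"]
    return node["label"]

def depth_of(node):
    if "label" in node:
        return 0
    return 1 + max(depth_of(node["left"]), depth_of(node["right"]))

# Labels switch from "a" to "b" around x = 5, with x = 5 and x = 6 out of place (noise).
X = [[float(i)] for i in range(1, 11)]
y = ["a", "a", "a", "a", "b", "a", "b", "b", "b", "b"]
full = build_tree(X, y, max_depth=10)
pruned = build_tree(X, y, max_depth=10, min_samples_leaf=3)
print(depth_of(full), depth_of(pruned))  # 3 2
```

The unpruned tree spends an extra level isolating the single out-of-place point at x = 6, while `min_samples_leaf=3` forbids such tiny leaves and keeps the tree shallower.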

Decision trees can be unstable because small variations in the data might result in a completely different tree being generated. This problem is mitigated by using decision trees within an ensemble.

The problem of learning an optimal decision tree is known to be NP-complete under several aspects of optimality and even for simple concepts. Consequently, practical decision-tree learning algorithms are based on heuristics such as the greedy algorithm, where locally optimal decisions are made at each node. Such algorithms cannot guarantee to return the globally optimal decision tree. This can be mitigated by training multiple trees in an ensemble learner, where the samples are drawn randomly with replacement and the features are randomly subsampled.
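A minimal sketch of the ensemble idea, assuming decision stumps (one-split trees) as the base learners: each stump is trained on a bootstrap sample drawn with replacement, and predictions are combined by majority vote. This is plain bagging; a random forest additionally considers only a random subset of features at each split, which is omitted here. All function names are hypothetical.

```python
import random
from collections import Counter

def fit_stump(X, y):
    """Fit a one-split tree: the (feature, threshold) with the fewest training errors."""
    best = None
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            left = [y[i] for i, row in enumerate(X) if row[f] <= t]
            right = [y[i] for i, row in enumerate(X) if row[f] > t]
            left_label = Counter(left).most_common(1)[0][0]
            # When t is the largest value the right side is empty; reuse the left label.
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = (sum(lab != left_label for lab in left)
                      + sum(lab != right_label for lab in right))
            if best is None or errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    _, f, t, left_label, right_label = best
    return lambda row: left_label if row[f] <= t else right_label

def fit_bagging(X, y, n_estimators=25, seed=0):
    """Train one stump per bootstrap sample."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_estimators):
        # Bootstrap: draw len(X) row indices uniformly, with replacement.
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models

def predict_bagging(models, row):
    """Majority vote over the ensemble."""
    votes = Counter(m(row) for m in models)
    return votes.most_common(1)[0][0]

X = [[float(i)] for i in range(20)]
y = ["a" if i < 10 else "b" for i in range(20)]
models = fit_bagging(X, y, n_estimators=25)
print(predict_bagging(models, [0.0]), predict_bagging(models, [19.0]))
```

Each stump places its threshold slightly differently depending on its bootstrap sample, which is exactly the instability described above; the vote averages those differences out.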

There are concepts that are hard to learn because decision trees do not express them easily, such as XOR, parity or multiplexer problems.
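XOR shows why a greedy learner struggles: neither input on its own says anything about the label, so every single-feature split scores zero information gain at the root, even though a depth-2 tree represents XOR perfectly. A learner that stops when no split improves impurity would therefore halt at the root. The sketch below checks this on the four XOR rows; the helper names are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def information_gain(rows, labels, feature):
    """Gain from splitting binary inputs on `feature` (value 0 vs value 1)."""
    n = len(labels)
    gain = entropy(labels)
    for value in (0, 1):
        subset = [lab for row, lab in zip(rows, labels) if row[feature] == value]
        if subset:
            gain -= len(subset) / n * entropy(subset)
    return gain

rows = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [a ^ b for a, b in rows]  # XOR: [0, 1, 1, 0]
for f in (0, 1):
    print(f"gain on x{f}:", information_gain(rows, labels, f))  # 0.0 for both

# Second level: inside the x0 == 0 branch, splitting on x1 separates the labels perfectly.
branch = [(row, lab) for row, lab in zip(rows, labels) if row[0] == 0]
print("gain on x1 within x0 == 0:",
      information_gain([r for r, _ in branch], [lab for _, lab in branch], 1))  # 1.0
```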

Decision tree learners create biased trees if some classes dominate. It is therefore recommended to balance the dataset prior to fitting with the decision tree.
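One simple way to balance a dataset is random oversampling: duplicate randomly chosen rows of the smaller classes until every class is as large as the biggest one. The sketch below does this with the standard library; the function name is hypothetical. Weighting is an alternative to duplication; scikit-learn's `class_weight="balanced"` option, for instance, weights each class by n_samples / (n_classes * class_count).

```python
import random
from collections import Counter

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows of each smaller class until every
    class has as many rows as the largest class."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, count in counts.items():
        members = [i for i, lab in enumerate(y) if lab == label]
        for _ in range(target - count):
            i = rng.choice(members)
            X_out.append(X[i])
            y_out.append(y[i])
    return X_out, y_out

X = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.6], [0.9], [1.0]]
y = ["neg"] * 6 + ["pos"] * 2
X_bal, y_bal = random_oversample(X, y)
print(Counter(y_bal))  # Counter({'neg': 6, 'pos': 6})
```

Oversampling should be applied to the training split only, so that duplicated rows do not leak into the evaluation data.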
